Wag-Tail AI Gateway

Use any LLM without losing control. Enterprise-grade security, cost management, and high availability for your AI applications.

Cost Control

Token-level tracking and budget management across all your LLM providers. Set quotas per user, team, or application and get real-time alerts before costs spiral.

- Real-time token usage dashboards per model and team

- Budget alerts and automatic throttling when a spending threshold is reached

- Cost-per-conversation tracking and optimization

- Up to 70% cost savings with intelligent model routing
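
As an illustration of the budget alerts and throttling described above, here is a minimal sketch of per-team spend tracking. It is not Wag-Tail's implementation: the `BudgetTracker` class, the 80% alert ratio, and the per-1k-token price are all assumptions chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class BudgetTracker:
    """Tracks spend per team against one fixed budget (hypothetical names)."""
    budget_usd: float
    alert_ratio: float = 0.8  # assumed default: alert at 80% of the budget
    spent_usd: dict = field(default_factory=dict)

    def record(self, team: str, tokens: int, usd_per_1k_tokens: float) -> str:
        """Add the cost of one call and return 'ok', 'alert', or 'throttle'."""
        cost = tokens / 1000 * usd_per_1k_tokens
        total = self.spent_usd.get(team, 0.0) + cost
        self.spent_usd[team] = total
        if total >= self.budget_usd:
            return "throttle"
        if total >= self.budget_usd * self.alert_ratio:
            return "alert"
        return "ok"


tracker = BudgetTracker(budget_usd=10.0)
# Three calls at an assumed price of $0.05 per 1k tokens: $5, then $9, then $11 in total.
status_1 = tracker.record("search-team", tokens=100_000, usd_per_1k_tokens=0.05)
status_2 = tracker.record("search-team", tokens=80_000, usd_per_1k_tokens=0.05)
status_3 = tracker.record("search-team", tokens=40_000, usd_per_1k_tokens=0.05)
print(status_1, status_2, status_3)
```

The same pattern extends to per-user or per-application quotas by changing the key used in `spent_usd`.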

70% Cost Savings

Route simple queries to cheaper models and complex ones to premium models automatically.
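
A rough sketch of what routing by query complexity could look like follows. The word-count threshold, the keyword list, and the model names `budget-model` and `premium-model` are placeholders for illustration; a production router would likely use a more robust signal than this heuristic.

```python
def choose_model(prompt: str, max_simple_words: int = 50) -> str:
    """Pick a model tier with a crude heuristic (an assumption, not the product's logic)."""
    complex_markers = ("analyze", "step by step", "compare", "refactor")
    text = prompt.lower()
    is_long = len(text.split()) > max_simple_words
    needs_reasoning = any(marker in text for marker in complex_markers)
    # Long prompts or prompts that ask for reasoning go to the premium tier.
    return "premium-model" if is_long or needs_reasoning else "budget-model"


simple_choice = choose_model("What is the capital of France?")
complex_choice = choose_model("Analyze this contract step by step")
print(simple_choice, complex_choice)
```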

Enterprise Security

Comprehensive security layer between your applications and LLM providers. PII redaction, prompt injection protection, and full audit trails for compliance.

- Automatic PII detection and redaction before LLM calls

- Prompt guardrails to prevent injection attacks

- Complete audit trails for GDPR, CCPA, and APAC data-protection compliance

- API key management with HashiCorp Vault integration

- Role-based access control with multi-tenant isolation
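
To make the idea of redacting PII before an LLM call concrete, here is a small regex-based sketch. The two patterns and the `redact_pii` helper are illustrative assumptions only; real PII detection usually covers many more categories and often relies on dedicated detection models rather than regular expressions alone.

```python
import re

# Deliberately simple patterns for illustration; they will miss many real-world formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact_pii(prompt: str) -> str:
    """Replace each detected value with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


redacted = redact_pii("Contact jane.doe@example.com or +1 415-555-0100 today")
print(redacted)  # Contact [EMAIL] or [PHONE] today
```

In this sketch only the redacted string would be forwarded to the provider.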

Security First

Your data never leaves your infrastructure. Air-gapped deployment available for maximum data sovereignty.

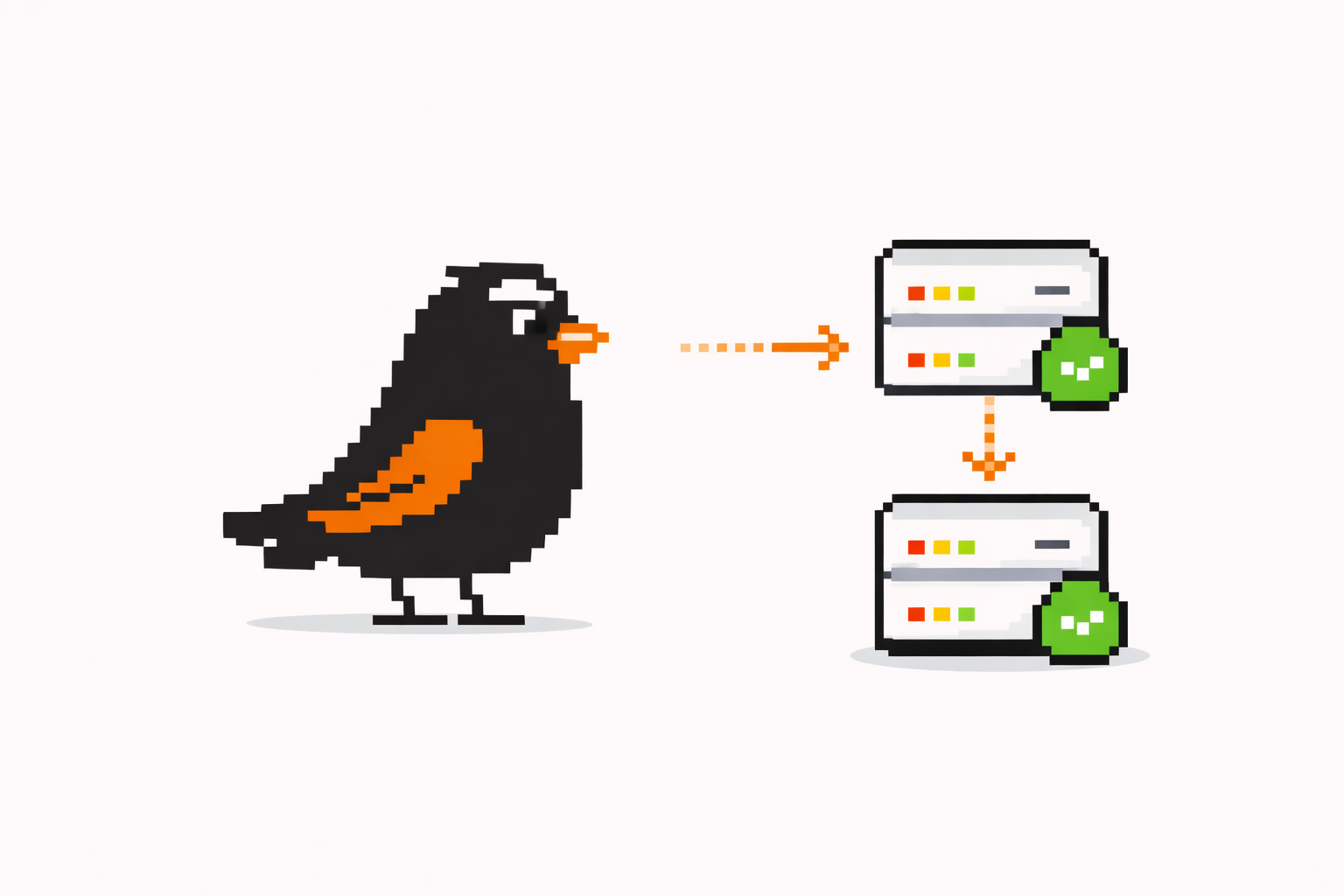

LLM Failover

Chain multiple LLM providers with priority-based failover. When one provider goes down, traffic automatically routes to the next available model, so a single provider outage does not take your application offline.

- Priority-based model chaining with automatic failover

- 99.9% uptime guaranteed through multi-provider redundancy

- Health checks and latency monitoring per provider

- Load balancing across multiple model instances
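
The priority-based chaining described above can be sketched as a loop that tries each provider in order and falls through on failure. The `ProviderError` type, the `complete_with_failover` function, and the two stub providers are hypothetical stand-ins; a real gateway would also apply timeouts, health checks, and retries.

```python
from typing import Callable, Sequence


class ProviderError(Exception):
    """Raised by a provider call that failed (hypothetical error type)."""


def complete_with_failover(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try providers in priority order; return (provider_name, reply)."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")  # remember the failure, try the next one
    raise ProviderError("all providers failed: " + "; ".join(errors))


def primary(prompt: str) -> str:
    raise ProviderError("503 service unavailable")  # simulated outage


def secondary(prompt: str) -> str:
    return f"echo: {prompt}"  # stub standing in for a real model call


used, reply = complete_with_failover(
    "hello", [("primary", primary), ("secondary", secondary)]
)
print(used, reply)  # secondary echo: hello
```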

99.9% Uptime

Never lose a conversation. Automatic failover means your AI stays online even when providers have outages.

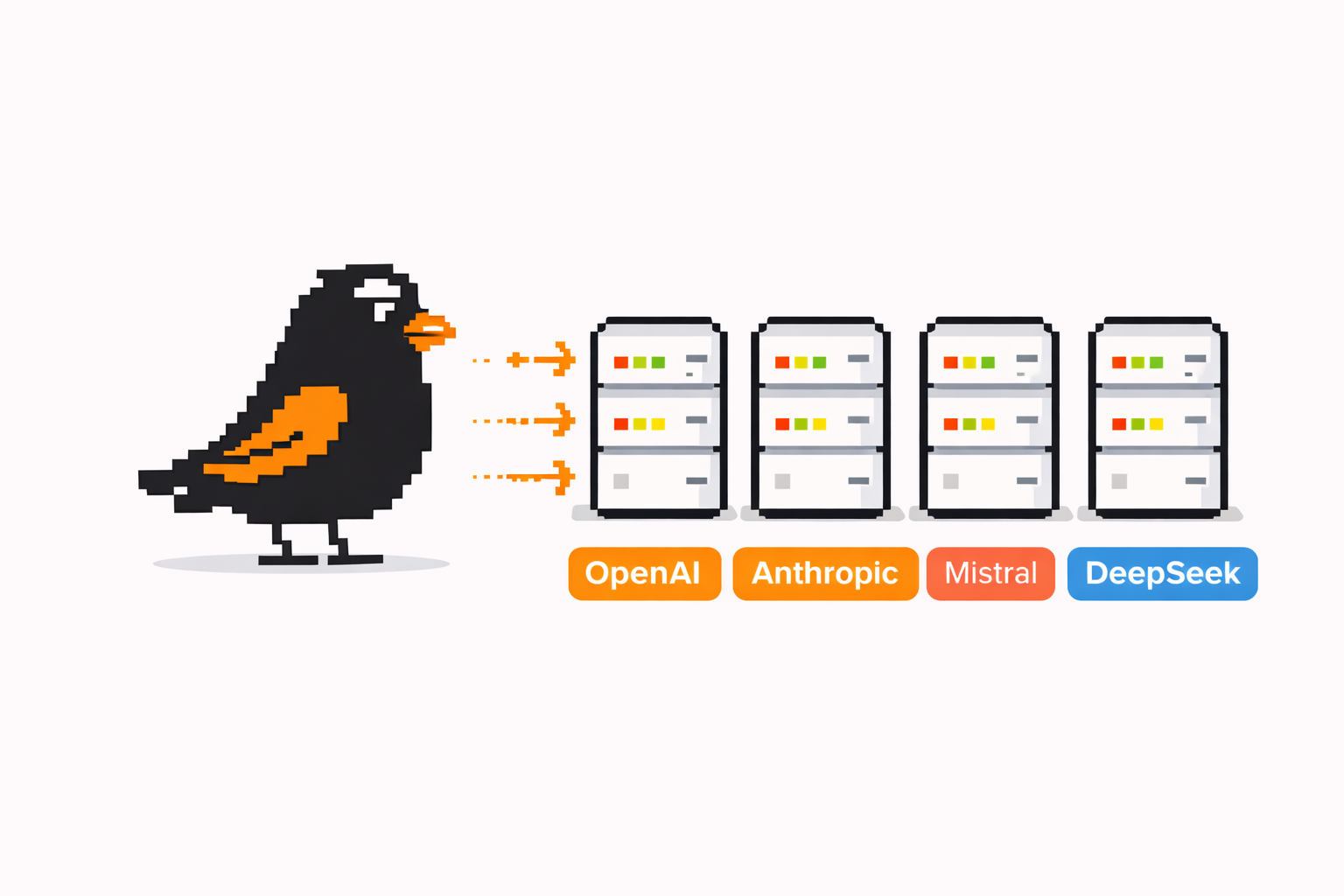

100+ Models Supported

Connect to any LLM provider through a unified API. Switch models anytime without code changes. GPT-4, Mistral, and local Ollama deployments are all available through one gateway.

- OpenAI, Anthropic, Mistral, Google, DeepSeek, and more

- Local model support via Ollama, vLLM, and TGI

- Azure OpenAI Service integration

- No vendor lock-in: switch models with a single config change

Unified API

One API endpoint for all models. Your applications never need to change when you switch providers.
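
One way to picture switching providers without touching application code: the application refers to a stable alias, and configuration decides which provider and model sit behind it. The `Route` type, the `default-chat` alias, and the request shape below are assumptions for illustration, not Wag-Tail's actual configuration format or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    provider: str
    model: str


# Hypothetical configuration: the application only ever uses the alias.
CONFIG = {"default-chat": Route(provider="openai", model="gpt-4")}


def build_request(alias: str, prompt: str, config: dict = CONFIG) -> dict:
    """Resolve the alias to a provider and model, then build one request shape."""
    route = config[alias]
    return {
        "provider": route.provider,
        "model": route.model,
        "messages": [{"role": "user", "content": prompt}],
    }


before = build_request("default-chat", "Hi")

# Switching providers is a configuration change; the calling code is identical.
new_config = {"default-chat": Route(provider="ollama", model="mistral")}
after = build_request("default-chat", "Hi", new_config)
print(before["model"], after["model"])  # gpt-4 mistral
```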

Ready to take control of your LLM infrastructure?

Talk to our team about deploying Wag-Tail AI Gateway in your environment.